Line Segment Detection

Line segment detection is the process of identifying straight line segments, each delimited by two endpoints, in an image or video.

Papers and Code

D3R-Net: Dual-Domain Denoising Reconstruction Network for Robust Industrial Anomaly Detection

Jan 27, 2026

Unsupervised anomaly detection (UAD) is a key ingredient of automated visual inspection in modern manufacturing. Reconstruction-based methods are appealing because of their simple architecture and fast inference, but they oversmooth high-frequency details. As a result, subtle defects are partially reconstructed rather than highlighted, which limits segmentation accuracy. We build on this line of work and introduce D3R-Net, a Dual-Domain Denoising Reconstruction framework that couples a self-supervised 'healing' task with frequency-aware regularization. During training, the network receives synthetically corrupted normal images and is asked to reconstruct the clean targets, which prevents trivial identity mapping and pushes the model to learn the manifold of defect-free textures. In addition to the spatial mean squared error (MSE), we employ a Fast Fourier Transform (FFT) magnitude loss that encourages consistency in the frequency domain. The implementation also allows an optional structural similarity (SSIM) term, which we study in an ablation. On the MVTec AD Hazelnut benchmark, D3R-Net with the FFT loss improves localization consistency over a spatial-only baseline: PRO AUC increases from 0.603 to 0.687, while image-level ROC AUC remains robust. Evaluated across fifteen MVTec categories, the FFT variant raises the average pixel ROC AUC from 0.733 to 0.751 and PRO AUC from 0.417 to 0.468 compared to the MSE-only baseline, at roughly 20 FPS on a single GPU. The network is trained from scratch and uses a lightweight convolutional autoencoder backbone, providing a practical alternative to heavy pre-trained feature embedding methods.
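
The combination of a spatial MSE term with an FFT magnitude term described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the L1 distance between magnitude spectra, the function names, and the `fft_weight` value of 0.1 are all assumptions made for illustration.

```python
import numpy as np

def fft_magnitude_loss(recon, target):
    """Mean absolute difference between the 2D FFT magnitude spectra.

    Assumption: an L1 distance on magnitudes; the abstract does not
    specify the exact distance used.
    """
    recon_mag = np.abs(np.fft.fft2(recon))
    target_mag = np.abs(np.fft.fft2(target))
    return float(np.mean(np.abs(recon_mag - target_mag)))

def combined_loss(recon, target, fft_weight=0.1):
    """Spatial MSE plus a weighted FFT magnitude term.

    fft_weight is a hypothetical hyperparameter, not a value from the paper.
    """
    mse = float(np.mean((recon - target) ** 2))
    return mse + fft_weight * fft_magnitude_loss(recon, target)

# Toy usage: a reconstruction that averages out fine detail is penalized
# in both the spatial and the frequency term.
rng = np.random.default_rng(0)
clean = rng.random((8, 8))
oversmoothed = np.full_like(clean, clean.mean())
loss_value = combined_loss(oversmoothed, clean)
```

A perfect reconstruction yields a loss of zero, while the constant (fully smoothed) image loses every non-DC frequency component and is penalized accordingly.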

Co-PLNet: A Collaborative Point-Line Network for Prompt-Guided Wireframe Parsing

Jan 26, 2026

Wireframe parsing aims to recover line segments and their junctions to form a structured geometric representation useful for downstream tasks such as Simultaneous Localization and Mapping (SLAM). Existing methods predict lines and junctions separately and reconcile them post-hoc, causing mismatches and reduced robustness. We present Co-PLNet, a point-line collaborative framework that exchanges spatial cues between the two tasks. Early detections are converted into spatial prompts via a Point-Line Prompt Encoder (PLP-Encoder), which encodes geometric attributes into compact and spatially aligned maps. A Cross-Guidance Line Decoder (CGL-Decoder) then refines predictions with sparse attention conditioned on complementary prompts, enforcing point-line consistency and efficiency. Experiments on Wireframe and YorkUrban show consistent improvements in accuracy and robustness, together with favorable real-time efficiency, demonstrating the method's effectiveness for structured geometry perception.

Detecting 3D Line Segments for 6DoF Pose Estimation with Limited Data

Jan 17, 2026

The task of 6DoF object pose estimation is one of the fundamental problems of 3D vision with many practical applications such as industrial automation. Traditional deep learning approaches for this task often require extensive training data or CAD models, limiting their application in real-world industrial settings where data is scarce and object instances vary. We propose a novel method for 6DoF pose estimation focused specifically on bins used in industrial settings. We exploit the cuboid geometry of bins by first detecting intermediate 3D line segments corresponding to their top edges. Our approach extends the 2D line segment detection network LeTR to operate on structured point cloud data. The detected 3D line segments are then processed using a simple geometric procedure to robustly determine the bin's 6DoF pose. To evaluate our method, we extend an existing dataset with a newly collected and annotated dataset, which we make publicly available. We show that incorporating synthetic training data significantly improves pose estimation accuracy on real scans. Moreover, we show that our method significantly outperforms current state-of-the-art 6DoF pose estimation methods in terms of pose accuracy (3 cm translation error, 8.2° rotation error) while not requiring instance-specific CAD models during inference.

TACK Tunnel Data (TTD): A Benchmark Dataset for Deep Learning-Based Defect Detection in Tunnels

Dec 16, 2025

Tunnels are essential elements of transportation infrastructure, but are increasingly affected by ageing and deterioration mechanisms such as cracking. Regular inspections are required to ensure their safety, yet traditional manual procedures are time-consuming, subjective, and costly. Recent advances in mobile mapping systems and Deep Learning (DL) enable automated visual inspections. However, their effectiveness is limited by the scarcity of tunnel datasets. This paper introduces a new publicly available dataset containing annotated images of three different tunnel linings, capturing typical defects: cracks, leaching, and water infiltration. The dataset is designed to support supervised, semi-supervised, and unsupervised DL methods for defect detection and segmentation. Its diversity in texture and construction techniques also enables investigation of model generalization and transferability across tunnel types. By addressing the critical lack of domain-specific data, this dataset contributes to advancing automated tunnel inspection and promoting safer, more efficient infrastructure maintenance strategies.

CVChess: A Deep Learning Framework for Converting Chessboard Images to Forsyth-Edwards Notation

Nov 18, 2025

Chess has experienced a large increase in viewership since the pandemic, driven largely by the accessibility of online learning platforms. However, no equivalent assistance exists for physical chess games, creating a divide between analog and digital chess experiences. This paper presents CVChess, a deep learning framework for converting chessboard images to Forsyth-Edwards Notation (FEN), which can then be passed to online chess engines to obtain the best next move. Our approach employs a convolutional neural network (CNN) with residual layers to perform piece recognition from smartphone camera images. The system processes RGB images of a physical chess board through a multistep process: image preprocessing using the Hough Line Transform for edge detection, projective transform to achieve a top-down board alignment, segmentation into 64 individual squares, and piece classification into 13 classes (6 unique white pieces, 6 unique black pieces and an empty square) using the residual CNN. Residual connections help retain low-level visual features while enabling deeper feature extraction, improving accuracy and stability during training. We train and evaluate our model using the Chess Recognition Dataset (ChessReD), containing 10,800 annotated smartphone images captured under diverse lighting conditions and angles. The resulting classifications are encoded as an FEN string, which can be fed into a chess engine to generate the optimal move.
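
The final step of the pipeline, encoding 64 per-square classifications as FEN, can be sketched as follows. This is an illustrative sketch rather than the CVChess code: the function name and the use of `.` for the empty class are assumptions, and only the piece-placement field is produced, since the remaining FEN fields (side to move, castling rights, and so on) cannot be read off a single board image.

```python
def board_to_fen_placement(board):
    """Encode an 8x8 grid of square labels as the FEN piece-placement field.

    board: 8 rows ordered from rank 8 down to rank 1, each holding 8 labels.
    Labels use standard FEN letters (uppercase for white, lowercase for
    black); '.' marks an empty square (a convention assumed here).
    """
    ranks = []
    for row in board:
        fen_row = ""
        empty_run = 0
        for label in row:
            if label == ".":
                empty_run += 1  # consecutive empty squares collapse to a digit
            else:
                if empty_run:
                    fen_row += str(empty_run)
                    empty_run = 0
                fen_row += label
        if empty_run:
            fen_row += str(empty_run)
        ranks.append(fen_row)
    return "/".join(ranks)

# Usage: the standard starting position.
start = [
    "rnbqkbnr",
    "pppppppp",
    "........",
    "........",
    "........",
    "........",
    "PPPPPPPP",
    "RNBQKBNR",
]
placement = board_to_fen_placement(start)
```

For the starting position this yields `rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR`.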

The CHASM-SWPC Dataset for Coronal Hole Detection & Analysis

Nov 18, 2025

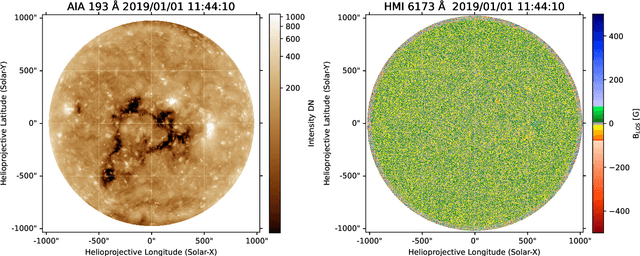

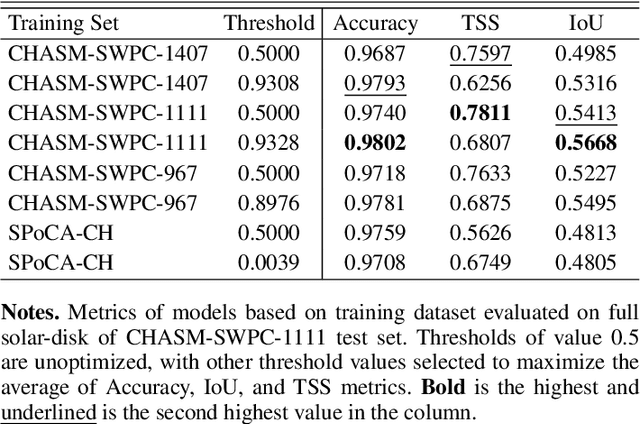

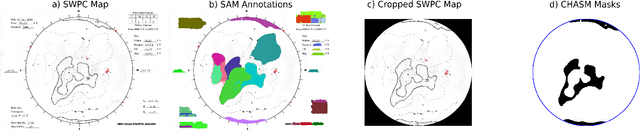

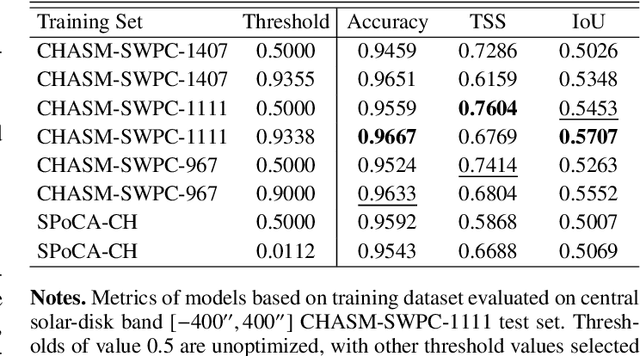

Coronal holes (CHs) are low-activity, low-density solar coronal regions with open magnetic field lines (Cranmer 2009). In the extreme ultraviolet (EUV) spectrum, CHs appear as dark patches. Using daily hand-drawn maps from the Space Weather Prediction Center (SWPC), we developed a semi-automated pipeline to digitize the SWPC maps into binary segmentation masks. The resulting masks constitute the CHASM-SWPC dataset, a high-quality dataset to train and test automated CH detection models, which is released with this paper. We developed CHASM (Coronal Hole Annotation using Semi-automatic Methods), a software tool for semi-automatic annotation that enables users to rapidly and accurately annotate SWPC maps. The CHASM tool enabled us to annotate 1,111 CH masks, comprising the CHASM-SWPC-1111 dataset. We then trained multiple neural networks with the CHRONNOS (Coronal Hole RecOgnition Neural Network Over multi-Spectral-data) architecture (Jarolim et al. 2021) on the CHASM-SWPC dataset and compared their performance. Training the CHRONNOS neural network on these data achieved an accuracy of 0.9805, a True Skill Statistic (TSS) of 0.6807, and an intersection-over-union (IoU) of 0.5668. These scores are higher than those of the original pretrained CHRONNOS model (Jarolim et al. 2021), which achieved an accuracy of 0.9708, a TSS of 0.6749, and an IoU of 0.4805 when evaluated on the CHASM-SWPC-1111 test set.
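
For readers unfamiliar with the three scores quoted above, the sketch below computes them from a pair of binary masks using the standard confusion-matrix definitions. The function name and the toy masks are illustrative assumptions, not taken from the paper or its evaluation code.

```python
def segmentation_scores(pred, truth):
    """Return (accuracy, TSS, IoU) for two flat binary masks of equal length.

    Assumes the ground truth contains both positive and negative pixels,
    so that neither rate below divides by zero.
    """
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif not p and t:
            fn += 1
        else:
            tn += 1
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # True Skill Statistic: true positive rate minus false positive rate.
    tss = tp / (tp + fn) - fp / (fp + tn)
    # Intersection over union of the positive class.
    iou = tp / (tp + fp + fn)
    return accuracy, tss, iou

# Toy usage: 3 of 10 pixels are coronal hole; the prediction finds 2 of
# them and adds 1 false positive.
truth = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
accuracy, tss, iou = segmentation_scores(pred, truth)
```

In this toy case accuracy is 0.8 while IoU is only 0.5, which illustrates how accuracy can stay high when background pixels dominate, while TSS and IoU are more sensitive to errors on the rarer positive class.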

Pandar128 dataset for lane line detection

Nov 10, 2025

We present Pandar128, the largest public dataset for lane line detection using a 128-beam LiDAR. It contains over 52,000 camera frames and 34,000 LiDAR scans, captured in diverse real-world conditions in Germany. The dataset includes full sensor calibration (intrinsics, extrinsics) and synchronized odometry, supporting tasks such as projection, fusion, and temporal modeling. To complement the dataset, we also introduce SimpleLidarLane, a lightweight baseline method for lane line reconstruction that combines BEV segmentation, clustering, and polyline fitting. Despite its simplicity, our method achieves strong performance under various challenging conditions (e.g., rain, sparse returns), showing that modular pipelines paired with high-quality data and principled evaluation can compete with more complex approaches. Furthermore, to address the lack of standardized evaluation, we propose a novel polyline-based metric, Interpolation-Aware Matching F1 (IAM-F1), that employs interpolation-aware lateral matching in BEV space. All data and code are publicly released to support reproducibility in LiDAR-based lane detection.

POEv2: a flexible and robust framework for generic line segment detection and wireframe line segment detection

Aug 27, 2025

Line segment detection in images has been studied for several decades. Existing line segment detectors can be roughly divided into two categories: generic line segment detectors and wireframe line segment detectors. Generic line segment detectors aim to detect all meaningful line segments in images, and traditional approaches usually fall into this category. Recent deep learning based approaches are mostly wireframe line segment detectors. They detect only line segments that are geometrically meaningful and have large spatial support. Because the two categories have different design goals, generic line segment detectors do not perform satisfactorily on wireframe line segment detection, and vice versa. In this work, we propose a robust framework that can be used for both generic line segment detection and wireframe line segment detection. The proposed method is an improved version of the Pixel Orientation Estimation (POE) method and is therefore named POEv2. POEv2 detects line segments from edge strength maps, and can be combined with any edge detector. We show in our experiments that by combining the proposed POEv2 with an efficient edge detector, it achieves state-of-the-art performance on three publicly available datasets.

TriLiteNet: Lightweight Model for Multi-Task Visual Perception

Sep 04, 2025

Efficient perception models are essential for Advanced Driver Assistance Systems (ADAS), as these applications require rapid processing and response to ensure safety and effectiveness in real-world environments. To address the real-time execution needs of such perception models, this study introduces the TriLiteNet model. This model can simultaneously manage multiple tasks related to panoramic driving perception. TriLiteNet is designed to optimize performance while maintaining low computational costs. Experimental results on the BDD100k dataset demonstrate that the model achieves competitive performance across three key tasks: vehicle detection, drivable area segmentation, and lane line segmentation. Specifically, TriLiteNet_base achieves a recall of 85.6% for vehicle detection, a mean Intersection over Union (mIoU) of 92.4% for drivable area segmentation, and an accuracy (Acc) of 82.3% for lane line segmentation with only 2.35M parameters and a computational cost of 7.72 GFLOPs. Our proposed model includes a tiny configuration with just 0.14M parameters, which provides a multi-task solution with minimal computational demand. Evaluated for latency and power consumption on embedded devices, TriLiteNet in both configurations shows low latency and reasonable power during inference. By balancing performance, computational efficiency, and scalability, TriLiteNet offers a practical and deployable solution for real-world autonomous driving applications. Code is available at https://github.com/chequanghuy/TriLiteNet.

Multi-View Camera System for Variant-Aware Autonomous Vehicle Inspection and Defect Detection

Sep 30, 2025

Ensuring that every vehicle leaving a modern production line is built to the correct variant specification and is free from visible defects is an increasingly complex challenge. We present the Automated Vehicle Inspection (AVI) platform, an end-to-end, multi-view perception system that couples deep-learning detectors with a semantic rule engine to deliver variant-aware quality control in real time. Eleven synchronized cameras capture a full 360° sweep of each vehicle; task-specific views are then routed to specialised modules: YOLOv8 for part detection, EfficientNet for ICE/EV classification, Gemini-1.5 Flash for mascot OCR, and YOLOv8-Seg for scratch-and-dent segmentation. A view-aware fusion layer standardises evidence, while a VIN-conditioned rule engine compares detected features against the expected manifest, producing an interpretable pass/fail report in approximately 300 ms. On a mixed data set combining Original Equipment Manufacturer (OEM) vehicle data for four distinct models with public scratch/dent images, AVI achieves 93% verification accuracy, 86% defect-detection recall, and sustains 3.3 vehicles/min, surpassing single-view or no-segmentation baselines by large margins. To our knowledge, this is the first publicly reported system that unifies multi-camera feature validation with defect detection in a deployable industrial automotive setting.
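
The idea of a VIN-conditioned rule engine that compares detected features against an expected manifest can be sketched in a few lines. Everything here is hypothetical: the manifest contents, the VIN string, the feature names, and the report layout are invented for illustration and do not describe the AVI system's actual rules.

```python
# Hypothetical manifest: VIN -> set of features expected for that variant.
MANIFEST = {
    "VIN-EXAMPLE-001": {"sunroof", "alloy_wheels", "ev_badge"},
}

def verify_vehicle(vin, detected_features, manifest=MANIFEST):
    """Compare fused detections against the manifest entry for this VIN.

    Returns a small report listing expected features that were not
    detected and detected features that the variant should not have.
    """
    expected = manifest[vin]
    missing = sorted(expected - detected_features)
    unexpected = sorted(detected_features - expected)
    return {
        "vin": vin,
        "passed": not missing and not unexpected,
        "missing": missing,
        "unexpected": unexpected,
    }

# Usage: the detectors found two of the three expected features.
report = verify_vehicle("VIN-EXAMPLE-001", {"sunroof", "alloy_wheels"})
```

Keeping the check as plain set differences makes the pass/fail outcome directly traceable to the specific features that caused it, which is one simple way to obtain an interpretable report.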
